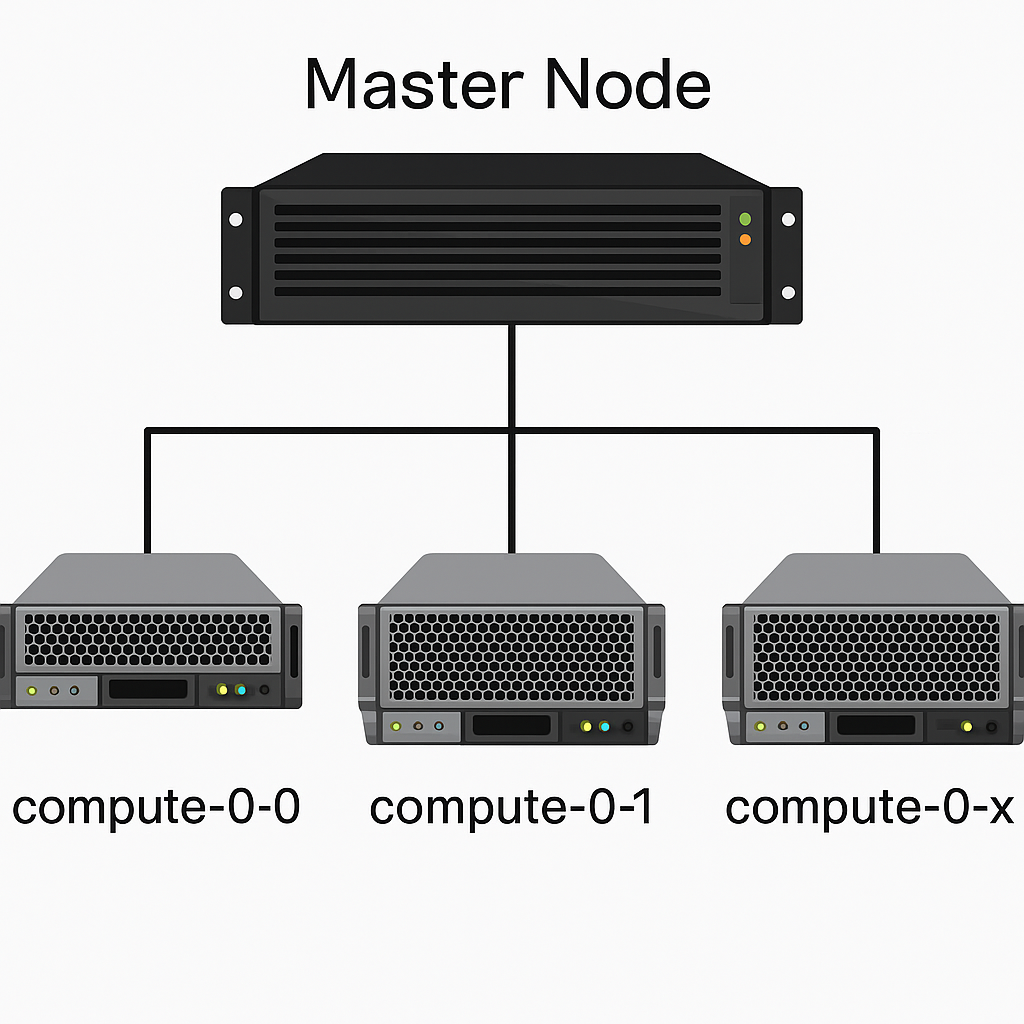

EEHPC Cluster Details

The EEHPC Cluster is hosted by LEAP Lab in the Electrical Engineering building, Indian Institute of Science (IISc).

GPU Resource Availability

| Node | Number of GPUs | GPU Memory per GPU |
|---|---|---|
| compute-0-0 | 2 | 16 GB |
| compute-0-1 | 2 | 16 GB |
| compute-0-2 | 2 | 16 GB |
| compute-0-3 | 2 | 16 GB |
| compute-0-4 | 2 | 16 GB |
| compute-0-6 | 3 | 11–16 GB |
| compute-0-8 | 2 | 24 GB |
| compute-0-5 | 3 | 48 GB |
| compute-0-7 | 2 | 48 GB |
| compute-0-9 | 3 | 48 GB |
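Inside a running job, it can help to check which GPUs were actually made visible to you. The sketch below is meant for the `pytorch2` or `python310` environments and uses standard PyTorch calls (`torch.cuda.is_available`, `torch.cuda.device_count`, `torch.cuda.get_device_properties`); `visible_gpus` is a hypothetical helper name, not a cluster tool. Memory is reported in GiB, so it will typically read slightly below the nominal figures in the table.

```python
import os


def visible_gpus():
    """List (index, name, total memory in GiB) for every GPU PyTorch can see.

    Returns an empty list when PyTorch is not installed (e.g. in an
    environment without torch) or when no GPU is visible to the job.
    """
    try:
        import torch
    except ImportError:
        return []
    if not torch.cuda.is_available():
        return []
    gpus = []
    for i in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(i)
        gpus.append((i, props.name, round(props.total_memory / 1024**3, 1)))
    return gpus


if __name__ == "__main__":
    # CUDA_VISIBLE_DEVICES, when set, restricts which physical GPUs are exposed.
    print("CUDA_VISIBLE_DEVICES =", os.environ.get("CUDA_VISIBLE_DEVICES", "<not set>"))
    for index, name, mem_gib in visible_gpus():
        print(f"GPU {index}: {name}, {mem_gib} GiB")
```

An empty result inside a job submitted to the default queue is expected, since that queue does not use GPU resources.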

Queue and GPU Resource Usage

| Queue Name | GPU Memory Eligibility | Max Jobs per User | Max Time Limit | GPU Usage Allowed |
|---|---|---|---|---|
| `all.q` (default) | None | N/A | N/A | No |
| `short-gpu.q` | Up to 24 GB | 6 | 4 hours | Yes |
| `gpu.q` | Up to 16 GB | 6 | 2 days | Yes |
| `med-gpu.q` | 24–48 GB | 3 | 4 days | Yes |
| `long-gpu.q` | 48 GB | 3 | 7 days | Yes |

Note:

By default, jobs are submitted to `all.q`, which does not use GPU resources. To use a GPU, submit your job to one of the GPU queues listed above.
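The queue table can be read as a simple lookup: choose the queue with the shortest time limit that still covers your per-GPU memory need and expected runtime. Below is a minimal sketch of that lookup, assuming a job fits a queue when its per-GPU memory is at most the upper bound in the "GPU Memory Eligibility" column and its runtime is within the time limit. `GPU_QUEUES` and `pick_queue` are hypothetical names; the sketch only picks a queue name and does not cover the submission command itself.

```python
from typing import Optional

# Queue limits transcribed from the table above:
# (queue name, upper bound of per-GPU memory in GB, time limit in hours)
GPU_QUEUES = [
    ("short-gpu.q", 24, 4),
    ("gpu.q", 16, 2 * 24),
    ("med-gpu.q", 48, 4 * 24),
    ("long-gpu.q", 48, 7 * 24),
]


def pick_queue(gpu_mem_gb: float, hours: float) -> Optional[str]:
    """Return the fitting GPU queue with the shortest time limit, or None."""
    candidates = [
        (limit_h, name)
        for name, max_mem_gb, limit_h in GPU_QUEUES
        if gpu_mem_gb <= max_mem_gb and hours <= limit_h
    ]
    if not candidates:
        return None  # nothing fits: shorten the job or reduce its memory use
    return min(candidates)[1]


if __name__ == "__main__":
    print(pick_queue(gpu_mem_gb=12, hours=2))    # short-gpu.q
    print(pick_queue(gpu_mem_gb=12, hours=30))   # gpu.q
    print(pick_queue(gpu_mem_gb=40, hours=72))   # med-gpu.q
    print(pick_queue(gpu_mem_gb=40, hours=150))  # long-gpu.q
```

Preferring the shortest limit that fits is a heuristic: it leaves the long-running queues, which allow only 3 jobs per user, free for jobs that genuinely need them.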

Disk Storage Details

- We now have four disk spaces: `/export` (roughly equivalent to `/home`), `/data1/`, `/data2/`, and `/data3/`, with approximately 51 TB, 28 TB, 28 TB, and 16 TB of storage, respectively.
- The data read/write speed hierarchy is: `/export` > `/data1/` ≫ `/data2/` ~ `/data3/`.
- Your primary working directory should be `/home/` (`/export`), as it offers the largest storage and the fastest speed.
- Use `/data2/` and `/data3/` for large, infrequently used files. Move inactive data out of `/home/` to these locations.
- The exact usage guidelines for `/data1/`, `/data2/`, and `/data3/` will be shared soon. Access to these directories will be granted on request, based on your need for additional storage.
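To find inactive data worth moving out of `/home/`, you can scan for large files that have not been used in a while. A minimal sketch follows; `find_stale_files` is a hypothetical helper, and its thresholds (90 days, 100 MB) are illustrative defaults, not cluster policy.

```python
import os
import stat
import time
from pathlib import Path


def find_stale_files(root, days=90, min_size_mb=100):
    """Yield (path, size_mb, days_since_last_use) for large, inactive files.

    "Last use" is the later of the access and modification times, because
    some filesystems update access times only lazily (or not at all).
    """
    now = time.time()
    cutoff_s = days * 24 * 3600
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            path = Path(dirpath) / filename
            try:
                st = path.lstat()  # lstat: do not follow symlinks
            except OSError:
                continue  # vanished or unreadable; skip it
            if not stat.S_ISREG(st.st_mode):
                continue  # only regular files take up real space
            last_used = max(st.st_atime, st.st_mtime)
            size_mb = st.st_size / 1024**2
            if size_mb >= min_size_mb and now - last_used >= cutoff_s:
                yield path, round(size_mb, 1), int((now - last_used) // 86400)
```

For example, `for p, size, age in find_stale_files(Path.home(), days=60): print(p, size, age)` lists candidates to move to `/data2/` or `/data3/` once you have access.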

Virtual Environments

- A common base conda environment should automatically be visible when you log in. If you don't see `base` next to your username after login, please contact the admin.
- For GPU jobs, two pre-configured environments named `pytorch2` and `python310` are available. For example, use the following command to activate `pytorch2`: `conda activate pytorch2`
- Pre-installed packages:
  - `base` (Python 3.13.5): matplotlib 3.10.0, numpy 2.1.3, pandas 2.2.3, scikit-image 0.25.0, scikit-learn 1.6.1, scipy 1.15.3
  - `pytorch2` (Python 3.12.0): huggingface-hub 0.34.3, librosa 0.11.0, matplotlib 3.10.5, numpy 2.1.2, pandas 2.3.1, pytorch-lightning 2.5.2, scikit-learn 1.7.1, scipy 1.16.1, soundfile 0.13.1, tensorboard 2.20.0, torch 2.6.0+cu124, torchaudio 2.6.0+cu124, torchmetrics 1.8.1, torchvision 0.21.0+cu124, tqdm 4.67.1, transformers 4.55.0
  - `python310` (Python 3.10.18): librosa 0.11.0, matplotlib 3.10.5, numpy 2.1.2, pandas 2.3.1, pytorch-lightning 2.5.2, scikit-learn 1.7.1, scipy 1.15.3, soundfile 0.13.1, tensorboard 2.20.0, torch 2.6.0+cu124, torchaudio 2.6.0+cu124, torchmetrics 1.8.1, torchvision 0.21.0+cu124
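To confirm that a job runs in the environment you expect (for instance, after `conda activate pytorch2`), you can compare the installed versions against the list above. A minimal sketch using the standard-library `importlib.metadata`; `EXPECTED_PYTORCH2` and `version_mismatches` are illustrative names, and the dictionary holds only a subset of the documented versions.

```python
import platform
from importlib.metadata import PackageNotFoundError, version

# A subset of the documented versions for the pytorch2 environment.
EXPECTED_PYTORCH2 = {
    "numpy": "2.1.2",
    "torch": "2.6.0+cu124",
    "torchaudio": "2.6.0+cu124",
    "transformers": "4.55.0",
}


def version_mismatches(expected):
    """Return {package: (expected, installed)} for packages that differ.

    `installed` is None when the package is missing from the active environment.
    """
    mismatches = {}
    for name, want in expected.items():
        try:
            have = version(name)
        except PackageNotFoundError:
            have = None
        if have != want:
            mismatches[name] = (want, have)
    return mismatches


if __name__ == "__main__":
    print("Python", platform.python_version())
    print(version_mismatches(EXPECTED_PYTORCH2) or "all checked packages match")
```

A mismatch after you install extra packages with pip may simply mean that pip upgraded a dependency while resolving the new package.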

Adding more packages

If you need an extra package on top of those in one of the three pre-installed environments (say, `pytorch2`), you can simply run `conda activate pytorch2` followed by `pip3 install <package_name>`. This installs the package for the `pytorch2` environment under your own home directory, `/home/<user>/`, so the package will work only from your user account.
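To check where a pip-installed package actually ended up, you can ask `importlib.metadata` for its install location. A minimal sketch; `install_location` is a hypothetical helper, and it reports the site-packages directory containing the package plus whether that directory lies under your home directory.

```python
from importlib.metadata import PackageNotFoundError, distribution
from pathlib import Path


def install_location(dist_name):
    """Return (site-packages directory, is_under_home), or None if not installed."""
    try:
        dist = distribution(dist_name)
    except PackageNotFoundError:
        return None
    # locate_file("") resolves to the directory the distribution was installed into.
    location = Path(dist.locate_file("")).resolve()
    home = Path.home().resolve()
    return location, location == home or home in location.parents
```

For example, after `pip3 install <package_name>`, calling `install_location("<package_name>")` should report a directory under `/home/<user>/` if the install went where described above.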

Need an environment with different Python or torch versions

If you have specific `python` or `torch` version requirements, create a new conda environment instead (see the Conda Environment Management Guide). Note that this new environment will be accessible only to you, unless you explicitly share it with another user.

Shared Storage Spaces

To avoid redundancy and ensure efficient use of disk space, the following shared directories have been set up for common use across users:

Datasets Directory

Store all datasets in the shared location: `/home/leapers/data`

Permissions:

- Any user can write into it.
- No user can delete the directory itself.
- If `user1` creates a directory `dataset1` inside this path, any other user (`user2`) can read it but cannot write to or delete `dataset1` unless `user1` explicitly grants permission.

Usage:

- As a user (`user1`), create a directory such as `/home/leapers/data/ESC_dataset` and keep the ESC dataset inside it.
- Do not create `/home/leapers/data/user1/ESC_dataset`, as other users cannot find it easily and may end up downloading the ESC dataset again.
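A quick existence check before downloading avoids duplicate copies of the same dataset. The sketch below creates the dataset directory directly under the shared root (never under a per-user subdirectory) and tells you whether it already existed; `claim_dataset_dir` is a hypothetical helper name.

```python
from pathlib import Path

SHARED_DATA_ROOT = Path("/home/leapers/data")


def claim_dataset_dir(dataset_name, root=SHARED_DATA_ROOT):
    """Return (path, already_existed) for a top-level dataset directory.

    If it already existed, someone has probably downloaded the dataset,
    so inspect its contents before downloading again.
    """
    if "/" in dataset_name or dataset_name in ("", ".", ".."):
        raise ValueError("use a single top-level name such as 'ESC_dataset'")
    path = Path(root) / dataset_name
    try:
        path.mkdir()  # fails if another user created it first
    except FileExistsError:
        return path, True
    return path, False
```

For example, `path, existed = claim_dataset_dir("ESC_dataset")` either reserves `/home/leapers/data/ESC_dataset` for your download or signals that it is already there.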

Feature Storage

Extracted features should be stored in: `/home/leapers/features`

Permissions:

- Any user can write inside it.
- No user can delete the folder.
- If `user1` creates a directory inside this path, any other user (`user2`) can read it but cannot modify or delete that directory unless `user1` explicitly grants permission.

Usage:

- As a user (`user1`), create a directory called `/home/leapers/features/user1`.
- Put your features inside a directory named after the feature set, e.g. `/home/leapers/features/user1/librosa_features_ESC/<features>.npy`
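The `<root>/<user>/<feature_name>` layout can be created with a small helper so scripts never write features to the wrong place. A minimal sketch; `feature_dir` is a hypothetical name, and `user` defaults to the current login name via `getpass.getuser()`.

```python
import getpass
from pathlib import Path

SHARED_FEATURES_ROOT = Path("/home/leapers/features")


def feature_dir(feature_name, user=None, root=SHARED_FEATURES_ROOT):
    """Create (if needed) and return <root>/<user>/<feature_name>."""
    user = user or getpass.getuser()
    path = Path(root) / user / feature_name
    path.mkdir(parents=True, exist_ok=True)  # safe to call repeatedly
    return path
```

With NumPy, a feature array could then be written as `np.save(feature_dir("librosa_features_ESC") / "clip_0001.npy", features)`, where the file name is only an example. The same layout applies to `/home/leapers/weights` by passing it as `root`.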

HuggingFace Cache

HuggingFace model weights are now automatically stored in: `/home/leapers`

You do not need to change any settings; this is handled automatically.
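This guide does not say how the cache location is configured. If it is done through environment variables, you can confirm from inside a job where downloads will land. The sketch below is a simplified re-implementation of the lookup order documented for `huggingface_hub`; when that library is installed, `huggingface_hub.constants.HF_HUB_CACHE` gives the authoritative value. `hf_hub_cache_dir` is a hypothetical helper name.

```python
import os
from pathlib import Path


def hf_hub_cache_dir(env=None):
    """Return the model cache directory huggingface_hub would use.

    Lookup order: HF_HUB_CACHE, HUGGINGFACE_HUB_CACHE (legacy), HF_HOME/hub,
    $XDG_CACHE_HOME/huggingface/hub, then ~/.cache/huggingface/hub.
    """
    env = os.environ if env is None else env
    for var in ("HF_HUB_CACHE", "HUGGINGFACE_HUB_CACHE"):
        if env.get(var):
            return Path(env[var]).expanduser()
    if env.get("HF_HOME"):
        return Path(env["HF_HOME"]).expanduser() / "hub"
    xdg = env.get("XDG_CACHE_HOME") or str(Path.home() / ".cache")
    return Path(xdg).expanduser() / "huggingface" / "hub"
```

If `print(hf_hub_cache_dir())` shows a path inside your own home directory, the automatic setting is probably not active in that shell; contact the admin.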

Model Weights

Your own trained model weights should be saved in: `/home/leapers/weights`

Permissions:

- Any user can write inside it.
- No user can delete the folder.
- If `user1` creates a directory inside this path, any other user (`user2`) can read it but cannot modify or delete that directory unless `user1` explicitly grants permission.

Usage:

- As a user (`user1`), create a directory called `/home/leapers/weights/user1`.
- Put your trained, fine-tuned, or other model weights inside a directory named after the model or experiment, e.g. `/home/leapers/weights/user1/finetune_ResNet_CIFAR10/resnet_ft.pt`

With the above shared spaces in place, your personal /home/username/ directory should be reserved only for:

- Code

- Logs

- A few intermediate results

Please avoid storing large datasets or model weights in your home directory.
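To check that your home directory follows this guideline, you can look for large files whose extension suggests they belong in one of the shared directories. A minimal sketch; `SHARED_DIR_FOR_EXT` and `misplaced_files` are hypothetical names, and the extension-to-directory mapping is an assumption you should adapt to your own file types.

```python
import os
import stat
from pathlib import Path

# Assumed mapping from file extension to the shared location suggested above.
SHARED_DIR_FOR_EXT = {
    ".pt": "/home/leapers/weights",
    ".pth": "/home/leapers/weights",
    ".ckpt": "/home/leapers/weights",
    ".safetensors": "/home/leapers/weights",
    ".npy": "/home/leapers/features",
    ".npz": "/home/leapers/features",
    ".wav": "/home/leapers/data",
    ".flac": "/home/leapers/data",
}


def misplaced_files(home, min_size_mb=50):
    """Yield (path, size_mb, suggested_dir) for large data or weight files."""
    for dirpath, _dirnames, filenames in os.walk(home):
        for filename in filenames:
            path = Path(dirpath) / filename
            target = SHARED_DIR_FOR_EXT.get(path.suffix.lower())
            if target is None:
                continue  # code, logs, and other small artifacts stay in home
            try:
                st = path.lstat()  # symlinks into shared dirs are not flagged
            except OSError:
                continue
            if not stat.S_ISREG(st.st_mode):
                continue
            size_mb = st.st_size / 1024**2
            if size_mb >= min_size_mb:
                yield path, round(size_mb, 1), target
```

For example, `for p, size, target in misplaced_files(Path.home()): print(p, size, "->", target)` lists files to move.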

Warning

Any user who violates these rules puts all other users' jobs at risk of crashing.

Violations, once identified, will be handled with a two-tier warning system:

- Yellow flag: Stern warning

- Red flag: User privileges removed or restricted